For me, a lot of stress and anxiety about working efficiently comes from momentary feelings of being ineffective. If I need to accomplish a work item, how long will it take? How many sub-tasks do I need to finish before I can complete it? How many of those do I understand well enough to do with minimal effort, and how many will require research before I can even begin implementing them? The more time, energy, and new knowledge a work item demands, the more stressful it becomes to complete.

So, I wanted to take a moment to break those feelings down into categories. I read Scott Hanselman’s Yak Shaving post years ago, and it has become part of the shared language among the development teams I work with. Before reading that post, I described Yak Shaving as “speed bumps,” but I had to explain the term every time I used it. Hopefully, writing this down will help me define a vocabulary so I can communicate this feeling more easily.

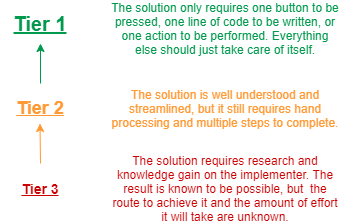

At the moment, the feeling of implementation efficiency can be broken down as:

Tier 3

This is when you need to implement something, but to do it you will first have to learn a new technology stack or a new paradigm. The task itself could be as trivial as adding exception handling to an application, but to get there you’ll need to research APM (application performance monitoring) solutions, determine which best fits your needs, and then implement the infrastructure and plumbing that lets you use the new tool.

An example of this might be your first use of Azure Application Insights in an ASP.NET Core application. Microsoft has put a tremendous amount of work into making it easy to use, but you’ll still need to learn how to create an Application Insights resource and how to add Application Insights to an ASP.NET Core application. Then you’ll probably re-evaluate whether you created the resource correctly to handle multiple environments, and most likely reimplement it with Dev, Test, and Prod in mind. After that, you’ll need to determine which parameters unique to your company should always be recorded, and work with external teams to set up firewall rules, develop risk profiles, and work through all the other details necessary to get a working solution.

Tier 3 is the most frustrating because you have to learn so much yourself just to reach the end value. It’s also the tier I feel most nervous about taking on, because doing so much work to produce something that feels so small can make me feel incredibly inefficient.

Tier 2

This is when you already know how to do something and understand what configuration needs to take place, but you still have to do the configuration yourself. When you know at the beginning exactly how much work it will take, there is a lot less frustration, because you can rationalize the time spent against the end value achieved. It becomes frustrating when the extra work you’re putting in is a form of Yak Shaving. For example, when you are dealing with a production issue and realize you’ll need to implement component X just to get the information required to solve the problem, that’s the moment you sigh heavily, because you know how much manual work it will take just to get component X working.

This level of efficiency usually happens when you’re working on the second or third project that uses a particular technology stack. Let’s use Application Insights as the example again. You’ve probably already developed some scripts that can automatically create the Application Insights instances, and you’re comfortable installing the NuGet packages you need, but you still have to run those scripts by hand, set up permissions by hand, and maybe even request that firewall rules be put in place. None of these tasks takes much time, but it feels like wasted time because you’re not producing the real end value you had in mind in the first place.

Tier 1

This is when the solution is not only well known to you, but your organization has also developed the tooling and infrastructure to minimize the time spent implementing it. This doesn’t come cheap, but the peace of mind that comes from having an instantaneous solution to a problem is what makes work enjoyable. Being able to stumble upon a problem, think “Oh, I can fix that,” and be back to whatever you were originally doing within moments creates a sense that any problem can be overcome. It removes the feeling that you’re slogging through mud with no end in sight and replaces it with confidence that you can handle whatever is thrown at you.

It’s rare for an organization to build up enough tooling and knowledge that Tier 1 can be achieved on a regular, ongoing basis. It requires constant improvement of work practices and investment in people’s knowledge, skill sets, and processes to align the tooling and capabilities of their environment with their needs.

When creating working environments, everyone starts out with the goal of creating a Tier 1 scenario, but it seems difficult both to get there and to stay there.

This is one of the things I find very frustrating about security. There is a lot of information available about what could go wrong and about different risk scenarios, but there just isn’t much prebuilt tooling that can get you to a Tier 1 level of Implementation Efficiency. People are trying, though: OWASP has the Glue Docker image, GitHub’s automated security update scanner is fantastic, and NWebSec for ASP.NET Core is a step in the right direction. But overall, there needs to be a better way to get security into the Tier 1 zone of Implementation Efficiency.